Last week, in Part 1 of this two-part ODI series, we discussed the vulnerabilities of the data warehouse, particularly when management lacks knowledge of what jobs are in the data warehouse, what those jobs actually do, or how they have been coded.

In Part 2, we will focus on the importance of a project manager when it comes to leveraging ODI for your data warehouse projects.

As consultants, we often come across clients who treat ODI like every other ETL tool. There is a notion that we can jump right in, hook the technology on the left up to the technology on the right, press a button and walk away. Can you do that with ODI? Absolutely. But let’s look more closely at why you do not want to rush an ODI project and why project management in ODI is important.

Employing a Methodology

Assume you already have ODI packages built and you need additional work completed. The prior developer was not particularly organized about naming the objects built in ODI. You also notice they hardcoded everything, down to the “TO” and “FROM” addresses in the emails that get sent during the jobs…

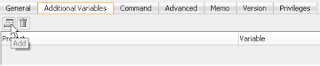

So, how do we get out of this mess? Start with a naming standard: a consistent prefix on objects in ODI makes them easier to troubleshoot down the road. Adding some variables to the project makes objects reusable and easier to maintain. In the scenario given above, a simple variable each for the "TO" and "FROM" fields of an email notification can save a corporation a lot of money and development time in the long run.
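To make the two ideas concrete, here is a minimal sketch in Python rather than in ODI itself. It is illustrative only: the prefixes (`PKG_`, `PRC_`, `VAR_`), the variable names, and the email addresses are hypothetical and are not the convention used on any particular project. It shows a prefix check over object names, and a notification whose "TO" and "FROM" values come from one shared set of variables instead of being hardcoded in every job.

```python
import re

# Hypothetical prefix convention (an assumption for illustration):
# each object type maps to the pattern its name is expected to follow.
NAMING_RULES = {
    "package": re.compile(r"^PKG_\d{3}_[A-Z0-9_]+$"),
    "procedure": re.compile(r"^PRC_\d{3}_[A-Z0-9_]+$"),
    "variable": re.compile(r"^VAR_[A-Z0-9_]+$"),
}


def find_naming_violations(objects):
    """Return the (type, name) pairs that do not follow the convention.

    Object types with no rule defined are reported as violations too,
    so nothing slips through unnoticed.
    """
    violations = []
    for obj_type, name in objects:
        rule = NAMING_RULES.get(obj_type)
        if rule is None or not rule.match(name):
            violations.append((obj_type, name))
    return violations


# Notification settings defined once, the way project-level variables
# would be, instead of being typed into every email step.
# Names and addresses are placeholders.
PROJECT_VARIABLES = {
    "VAR_MAIL_FROM": "etl-jobs@example.com",
    "VAR_MAIL_TO": "dw-support@example.com",
}


def build_notification(job_name, status, variables=PROJECT_VARIABLES):
    """Build an email notification from shared variables."""
    return {
        "from": variables["VAR_MAIL_FROM"],
        "to": variables["VAR_MAIL_TO"],
        "subject": f"{job_name}: {status}",
    }
```

With this shape, changing the support address means editing one variable, and a badly named object such as `load customers v2` shows up in the violation list before it becomes a troubleshooting problem.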

Here at CheckPoint we have come up with a numbering methodology, which is shared with our project managers to keep ODI projects organized. By taking time to look at the whole picture, not just one job at a time, the packages can be rebuilt so they interact, rather than just exist.

By employing this methodology we can immediately identify areas of disparity as well as ways to save on future maintenance costs, which helps keep those "I Don't Knows…" out of your data warehouse, as we discussed in Part 1. We require our developers to document as they go so as not to miss any of the details. This also makes future knowledge transfer as smooth as possible.